A New Science of the Artificial? Revisiting Herbert Simon in the Algorithmic Era

Herbert Simon, the only individual to have received both a Turing Award and a Nobel in Economics, is as relevant now as when he wrote the path-breaking "The Sciences of the Artificial."

Artificial Intelligence (AI) is ubiquitous in daily life. AI and computing have evolved from technologies that enable routine labor substitution to technologies that perform tasks requiring reasoning and perception[i] (such as speech recognition and medical diagnostics). This evolution has transformed our world, and with it comes an imperative to comprehend the ethical and socio-technical implications of AI. In popular discourse, AI has been presented either as a sort of magical force that can transform our lives and usher in miraculous improvements in well-being[ii] or, alternatively, as the road to a dystopian universe where our human selves are reduced to mindless automatons[iii].

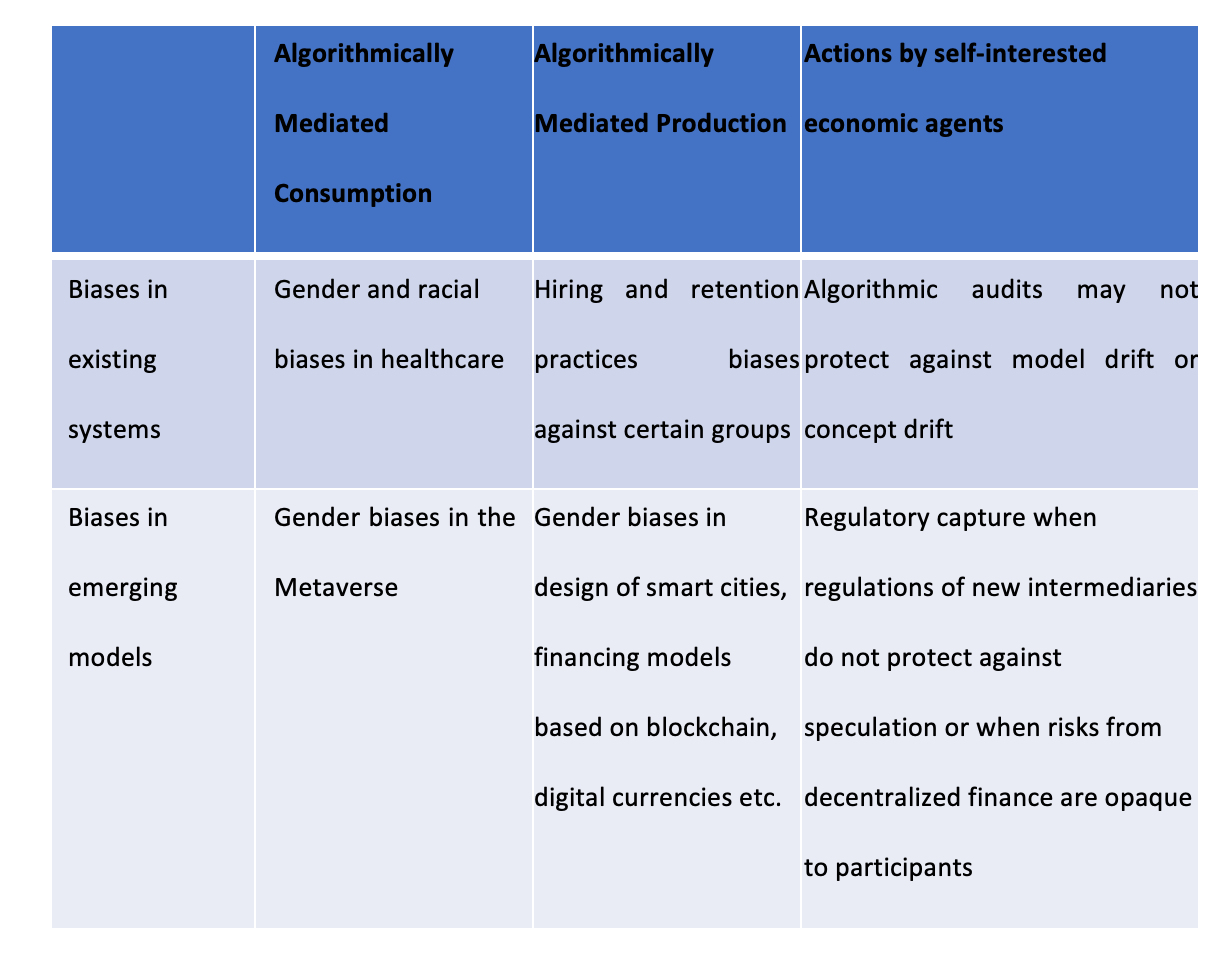

Across various industries, and among regulators and consumers, there is a growing consensus that issues of ethics and fairness in AI are immediate and important. In high-profile examples, healthcare organizations have faced scrutiny over biased algorithms that recommend different standards of care for different groups of patients[iv], and algorithmic hiring and lending practices have drawn attention because they could discriminate against women and minorities[v]. Standards for inclusive and equitable AI, while sorely needed, must be complemented by an understanding of the evolution of socio-technical systems. First, we need to recognize that AI is enabling a fundamental transformation of work and society. Assessments of bias and equity in AI need to consider not just the inequities in current generations of technology, but also the inequities in emerging models of techno-economic activity. Second, any assessment of bias and fairness in AI needs to encompass the motivations and incentives of self-interested economic agents and the design choices made by firms, governments and individuals.

To characterize the different types of bias that we can encounter with AI, I distinguish between algorithmically mediated consumption and algorithmically mediated production. The former occurs when, for example, recommendation algorithms influence what news articles we read. The latter occurs when algorithms that assign work in an Amazon fulfilment center, or algorithms used to arrange ride shares in the gig economy, are not transparent to workers, whose livelihoods may be at the mercy of opaque and inequitable management by algorithm. At the same time, newer models of economic organization may be gamed by self-interested actors. In complexity theory, it is axiomatic that complex adaptive systems “have a large numbers of components, often called agents, that interact and adapt or learn[vi].” Emergence refers to the property by which a complex adaptive system exhibits new behaviors or properties when multiple parts of the system interact with each other. When the means of production and the means of consumption are both algorithmically mediated, and our understanding of the world around us and our notion of the self are themselves artifacts of the algorithms around us, humans and institutions alike will, through the process of emergence, conceptualize newer ways of synthesizing the socio-technical environment we are part of.

Herbert Simon, in his book “The Sciences of the Artificial[vii],” distinguished between natural phenomena and artificial phenomena, such as technological artifacts, a category that encompasses AI systems. Simon defined an artifact as “an ‘interface’ in today’s terms between an ‘inner’ environment, the substance and organization of the artifact itself, and an ‘outer’ environment, the surroundings in which it operates.” AI has evolved from Herbert Simon and Allen Newell’s classification of thinking machines[viii] and a framework for understanding human intuition to the era of large language models[ix], dubbed foundation models, which power large-scale automation of perceptual and reasoning tasks. With large language models (LLMs) such as GPT-3, issues of intentionality and goal-seeking in machine cognition arise. In recent years, a scientific field has emerged that studies machine behavior[x], including the technical, legal and institutional constraints on intelligent machines, and that treats these machines not as engineering artifacts but as a class of actors with particular behavioral patterns and ecology. Alongside machine cognition, we should recognize that human beings and organizations are themselves complex adaptive systems and interfaces (to borrow Simon’s term) that actively engage with and respond to incentives in the environment. Academics have highlighted that our theories of human behavior were not developed with the deep societal reach and capabilities of current generations of algorithms in mind[xi]. Understanding how AI is interwoven with human agency and societal institutions is not merely a matter of academic wrangling, but one that concerns all of us whose lives are shaped by algorithms.

I will sketch out three aspects in which AI and automated decision making necessitate newer ways of thinking, from both academics and practitioners[xii]. Three interrelated aspects of technology have long been a feature of research in business and economics: the adoption and usage of technology, managerial cognition through technology, and the role of technology in firm organization. Each of these is a vast area of thought, encompassing multiple disciplines and philosophical streams, so I will attempt only a very short synopsis of each.

Adoption and Usage of Information Technology

Researchers and practitioners alike have long grappled with why and how humans use information technology artifacts. I summarize a few differences between what prior theories have studied and how artifacts change in the algorithmic era.

Managerial cognition and sensemaking

The field of organizational studies grapples with how managerial decision makers understand the environment they operate in, and how they employ technology to help navigate change. For instance, how do the boundaries of managerial cognition and managerial sense-making change when AI impacts the acquisition and interpretation of unique human knowledge? How does the process by which AI learns from human behavior over time supplant or engender human behaviors and human prejudices? Managers may find it hard to react to unexpected changes in day-to-day conditions, be it spikes in demand or capacity utilization problems. However, the discretization of transactions, the decoupling of different aspects of production, and the algorithmic enforcement of decisions also end up disconnecting the inputs to decisions traditionally made by managers (such as localized capacity planning and demand planning) from the job descriptions of front-line workers (warehouse pickers in the case of Amazon). Organizations traditionally had well-developed organizational architecture and design choices, including planning, rules, programs, and procedures, that formally designate roles and institutionalize interactions between partners, lessening the challenges of aligning actions across independent agents. Traditionally, managers had to decide not only how to maximize the use of this information but also how to create organizational processes, such as decision rights, that leverage information visibility. With algorithmic enforcement, however, such decisions are taken away from managers. The question is what new methods of control and coordination will supplant and extend the algorithmic enforcement of discretized tasks within an organization.

Information and the locus of control

Economic theories have extensively studied the process by which organizations assign control structures to deal with external uncertainty. The Internet has made information integration across firms technically and economically feasible, and firms have indeed achieved high levels of transparency and virtual integration across the supply chain, along with the ability to react to actual demand patterns in real time and to realize operational efficiencies in matching supply with demand. Real-time visibility across the supply chain has enabled companies to orchestrate operations across networks of suppliers and contract manufacturers and to benefit from access to their specialized capabilities. The IT-enabled vertical disintegration of firms led to renewed interest, among academics and supply chain practitioners alike, in the interdependence and resilience of decisions normally made within a firm, such as production planning and tactical decision-making, that now must be made in conjunction with supply chain partners.

In a seminal paper, Ronald Coase[xiii] questioned why firms exist at all given that “production could be carried on without any organization at all”, and given that “the price mechanism should give the most efficient result.” Coase suggested that firms exist because they reduce transaction costs, such as search and information costs and bargaining and enforcement costs. Why then isn’t all production carried on by a single big firm? Coase gave two main reasons. “First, as a firm gets larger, there may be decreasing returns to the entrepreneur function, that is, the costs of organizing additional transactions within the firm may rise… Secondly…as the transactions which are organized increase, the entrepreneur…fails to make the best use of the factors of production.” However, both of these costs are significantly different in the algorithmic era. We are now witnessing algorithmically mediated production systems encompassing a range of organizational forms, from meta platforms to open innovation. Moreover, smart contracts and the discretization of transactions have the further potential to unbundle existing modes of firm organization, and could enable Yelp-like reputation services[xiv] to function alongside micro transactions traditionally performed within firms.

[i] Kambhampati (2021). Polanyi's Revenge and AI's New Romance with Tacit Knowledge, retrieved from https://cacm.acm.org/magazines/2021/2/250077-polanyis-revenge-and-ais-new-romance-with-tacit-knowledge/fulltext

[ii] Kai-Fu Lee, “How AI can save our humanity,” retrieved from

[iii] “Raging robots, hapless humans: the AI dystopia,” review of Stuart Russell’s Human Compatible, retrieved from https://www.nature.com/articles/d41586-019-02939-0

[iv] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6875681/

[v] https://www.marketwatch.com/story/do-ai-powered-lending-algorithms-silently-discriminate-this-initiative-aims-to-find-out-11637246524

[vi] https://deepblue.lib.umich.edu/bitstream/handle/2027.42/41486/11424_2006_Article_1.pdf

[vii] Herbert Simon, “The Sciences of the Artificial,” MIT Press, Cambridge, 1969. https://mitpress.mit.edu/books/sciences-artificial

[viii] Herbert A. Simon and Allen Newell, “Heuristic Problem Solving: The Next Advance in Operations Research,” Operations Research, Vol. 6, No. 1 (Jan. - Feb., 1958), pp. 1-10. Retrieved from https://www.jstor.org/stable/167397

[ix] On the Opportunities and Risks of Foundation Models, retrieved from https://arxiv.org/pdf/2108.07258.pdf

[x] Awad, E. et al. The Moral Machine experiment. Nature 563, 59–64 (2018). https://www.nature.com/articles/s41586-018-0637-6

[xi] Wagner, C., Strohmaier, M., Olteanu, A. et al. Measuring algorithmically infused societies. Nature 595, 197–204 (2021). https://doi.org/10.1038/s41586-021-03666-1

[xii] Human cognition and the AI revolution retrieved from https://pubmed.ncbi.nlm.nih.gov/31165494/

[xiii] https://onlinelibrary.wiley.com/doi/full/10.1111/j.1468-0335.1937.tb00002.x

[xiv] https://hbr.org/2013/10/consulting-on-the-cusp-of-disruption
